High Performance Computing for quantum many-body systems

The quantum many-body problem is exponentially hard in the number of particles. For quantum spin systems, the Hilbert space dimension scales as $2^N$, where $N$ denotes the number of spins. I have been working on high-performance computing aspects of exact diagonalization, developing novel algorithms that exploit conservation laws and enable distributed-memory parallelization. Implementing these ideas led to the first exact ground-state simulation of an $N=50$ spin system.
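To make the scaling concrete, the following Python sketch counts basis states for a periodic chain of $N$ spin-1/2 sites. It is a plain brute-force illustration, not the sublattice coding algorithm. Conservation of the number of up spins (total $S^z$) restricts the basis to $\binom{N}{N/2}$ states in the zero-magnetization sector, and translation symmetry groups the remaining states into orbits, each represented by its smallest bit pattern. The chain geometry and all function names are assumptions chosen for illustration.

```python
from math import comb
from itertools import combinations


def full_dim(n: int) -> int:
    """Dimension 2^N of the full spin-1/2 Hilbert space."""
    return 2 ** n


def sz_sector_dim(n: int, n_up: int) -> int:
    """Dimension of the sector with a fixed number of up spins (Sz conservation)."""
    return comb(n, n_up)


def states_with_n_up(n: int, n_up: int):
    """Yield all N-bit integers with exactly n_up set bits (bit = 1 means spin up)."""
    for ups in combinations(range(n), n_up):
        yield sum(1 << i for i in ups)


def translate(state: int, n: int) -> int:
    """Cyclically shift an N-bit configuration by one site (periodic chain)."""
    return ((state << 1) | (state >> (n - 1))) & ((1 << n) - 1)


def representative(state: int, n: int) -> int:
    """Smallest integer in the translation orbit of `state` (brute force, O(N))."""
    best, s = state, state
    for _ in range(n - 1):
        s = translate(s, n)
        best = min(best, s)
    return best


def count_representatives(n: int, n_up: int) -> int:
    """Number of distinct translation orbits in the fixed-n_up sector."""
    return len({representative(s, n) for s in states_with_n_up(n, n_up)})


if __name__ == "__main__":
    n = 50
    # Memory of a single double-precision (8-byte) vector, in petabytes.
    print(f"full space:    {full_dim(n):.3e} states, "
          f"{8 * full_dim(n) / 1e15:.1f} PB per vector")
    print(f"Sz = 0 sector: {sz_sector_dim(n, n // 2):.3e} states, "
          f"{8 * sz_sector_dim(n, n // 2) / 1e15:.1f} PB per vector")
    # A small chain is cheap enough to enumerate explicitly.
    print(f"N = 16, Sz = 0: {sz_sector_dim(16, 8)} states, "
          f"{count_representatives(16, 8)} translation orbits")
```

For $N=50$, a single double-precision vector needs about 9.0 PB in the full space and about 1.0 PB in the zero-magnetization sector; space group symmetries reduce this further by roughly the order of the group. For $N=16$, the 12870 zero-magnetization states fall into 810 translation orbits, close to the estimate $\binom{16}{8}/16 \approx 804$. The brute-force search above applies every symmetry operation to every state, which is the per-state cost an efficient symmetry-adapted basis algorithm has to keep small.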

Exact diagonalization is a fundamental and versatile technique to study

quantum many-body systems. Since it does not perform any approximations,

it can yield reliable insights if the calculations can be performed on a

system large enough to capture the essential physical phenomena. However,

due to the exponential scaling one is typically limited to a few tens of

electrons or spins. To extend the range of possible geometries I have

developed these computations towards high-performance computing during

my Ph.D. with Andreas Läuchli. There are two essential ingredients for

making these simulations possible. First, Hamiltonian symmetries like

space group symmetries and particle number conservation have to be

exploited. For this, I developed the so-called "sublattice coding algorithm"

which efficiently deals with a symmetry-adapted basis of the Hilbert

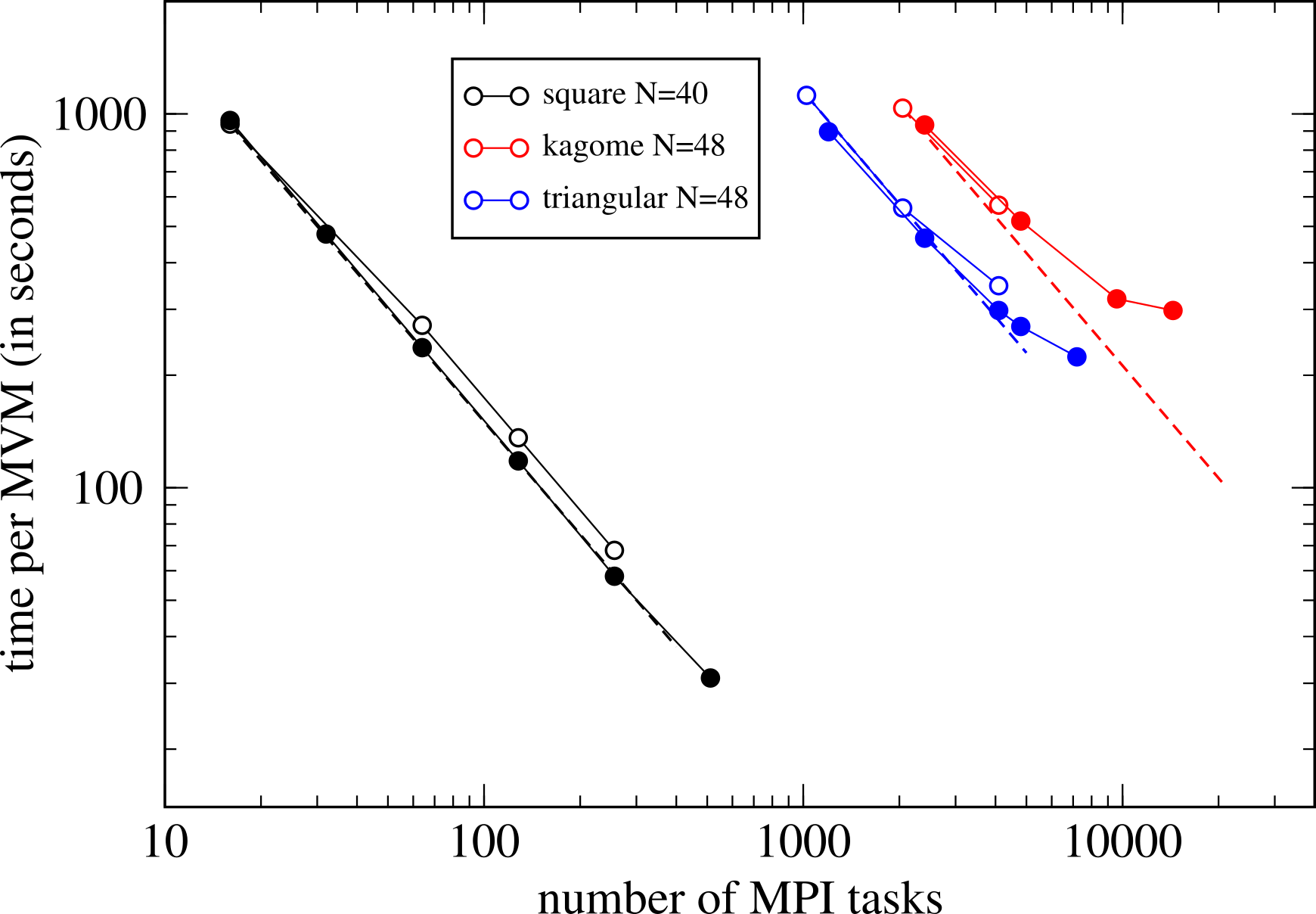

space. Second, I proposed an efficient parallelization method for

distributed memory machines by randomly distributing the basis of the

Hilbert space. More precisely, basis configurations are hashed onto

different MPI processes. These algorithms are described in detail in

Ref.
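The hashing idea can be sketched without an MPI installation by simulating the processes ("ranks") inside a single Python program. Several assumptions apply: a periodic spin-1/2 chain with $S^z$ conservation, the spin-flip part of the Heisenberg exchange as an example of an off-diagonal Hamiltonian term, and a splitmix64-style bit mixer as the hash. The hash function in Ref. may differ, and the helper names `mix64`, `owner`, `partition` and `message_counts` are hypothetical. Because every rank evaluates the same `owner` function, it can determine where each off-diagonal contribution must be sent without storing a global lookup table. In an actual MPI code, each rank would hold only its own list, and its row of `counts` would set the send sizes of an all-to-all exchange such as `MPI_Alltoallv`.

```python
from itertools import combinations

MASK64 = (1 << 64) - 1


def mix64(x: int) -> int:
    """splitmix64-style finalizer: scrambles the bits of a 64-bit integer."""
    z = (x + 0x9E3779B97F4A7C15) & MASK64
    z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
    z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
    return z ^ (z >> 31)


def owner(state: int, n_ranks: int) -> int:
    """Rank that stores `state` (hypothetical helper); any rank can evaluate it."""
    return mix64(state) % n_ranks


def states_with_n_up(n: int, n_up: int):
    """Yield all N-bit configurations with exactly n_up up spins."""
    for ups in combinations(range(n), n_up):
        yield sum(1 << i for i in ups)


def exchange_targets(state: int, n: int):
    """Configurations reached by the spin-flip part of the Heisenberg
    exchange on the bonds (i, i+1) of a periodic chain."""
    for i in range(n):
        j = (i + 1) % n
        if ((state >> i) & 1) != ((state >> j) & 1):
            # Antiparallel pair: flipping both spins keeps the number of up spins.
            yield state ^ ((1 << i) | (1 << j))


def partition(n: int, n_up: int, n_ranks: int):
    """Hash every basis state onto a rank; returns the local basis of each rank."""
    local = [[] for _ in range(n_ranks)]
    for s in states_with_n_up(n, n_up):
        local[owner(s, n_ranks)].append(s)
    return local


def message_counts(local, n: int, n_ranks: int):
    """counts[src][dst]: off-diagonal contributions that rank src sends to
    rank dst during one Hamiltonian-vector multiplication."""
    counts = [[0] * n_ranks for _ in range(n_ranks)]
    for src, states in enumerate(local):
        for s in states:
            for t in exchange_targets(s, n):
                counts[src][owner(t, n_ranks)] += 1
    return counts


if __name__ == "__main__":
    n, n_up, n_ranks = 16, 8, 4
    local = partition(n, n_up, n_ranks)
    print("states per rank:", [len(x) for x in local])
    counts = message_counts(local, n, n_ranks)
    total = sum(map(sum, counts))
    off_rank = total - sum(counts[r][r] for r in range(n_ranks))
    print(f"fraction of contributions sent to another rank: {off_rank / total:.2f}")
```

In this sketch, the hash scatters configurations essentially uniformly, so each of $P$ ranks holds about $1/P$ of the basis, while roughly $(P-1)/P$ of the off-diagonal contributions target another rank. The random distribution thus trades locality for good load balance, with communication that is close to all-to-all.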